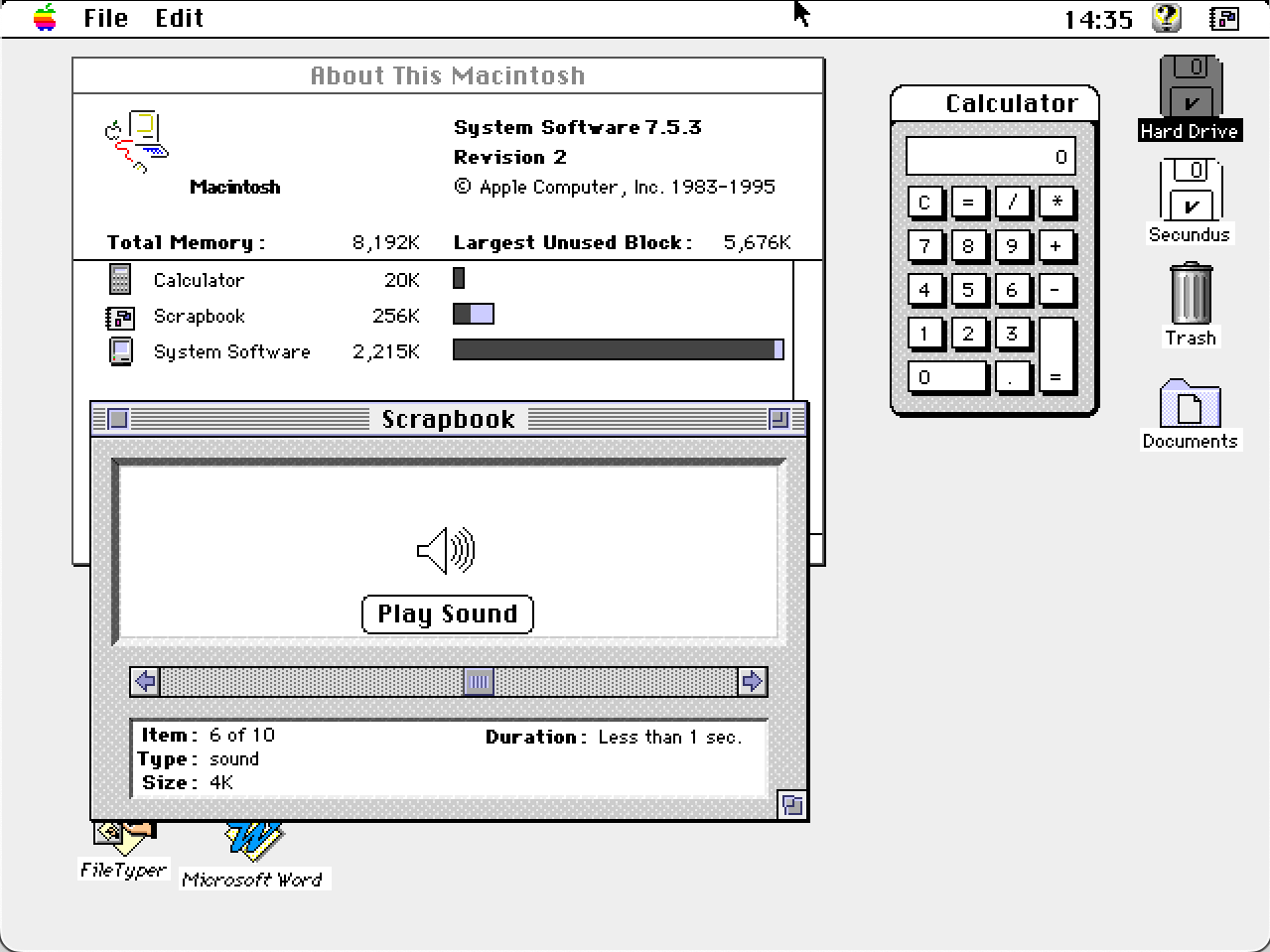

When I was looking into studying computer science, my father did the reasonable thing and arranged for me to talk with a family friend who was working in computers. At the time, I had just discovered a functional graphical interface on the Macintosh. There was GEOS for the Commodore 64, but it was not really usable, particularly with a joystick. The Amiga had Workbench, but it seemed to be mostly used to launch games. So I was really enthusiastic about the concept, but the person I talked to thought it was a gimmick: no sensible corporation would pay for the computing power needed to run a graphical environment when DOS was perfectly fine.

During my professional life I heard many other predictions of the same type:

- 640K ought to be enough for anybody¹.

- Only scientists need a floating point unit.

- More than 16/256 colours are superfluous for normal use.

- No work computer will ever need a sound card.

- What is the point of a DSP in a computer?

- Graphics cards will never be useful outside of CAD and games.

- Laptops will never supplant cheaper, more powerful and upgradable desktop computers.

- Internet access is not needed for work.

- Web-cams are a gimmick that will never be necessary for work.

There were some fads, of course, but somehow most of them revolved around 3D visualisation.

It seems pretty clear at this stage that AI is the next big thing, but what does that mean? Bill Gates thinks that AI is as important as the transition to the GUI; I feel that the internet and mobile phones had an equally significant impact. We can look at the previous big changes in computing:

- Micro-computers gave users access to computers.

- Graphical user interfaces gave users the ability to visualise and interact with data.

- The internet gave users the ability to access and interact with other users far away.

- Smart phones gave users the ability to carry their devices everywhere and capture data.

Each of these capabilities was usually available to a small subset of users before the corresponding technological change. Some people had access to computers before micro-computers arrived, but they were rare. Some people could interact with others far away before the internet, but again they were a minority.

I think AI will allow users to translate information: between human languages, between media types (generating pictures from text, or summarising pictures into text), or through some arbitrary transformation. This means users probably won’t access the original data that often, but instead a summary, a translation or a transformation of it. Or something completely made up. Who needs the internet when an AI can build a special one, just for you?

The thing is, with each transition, there were people and companies that did not grok the new world and typically just ran the old thing in the new paradigm. So for quite some time, the main use of the graphical user interface on Unix systems was just to run terminal windows and Emacs editors side by side, and there were few real WYSIWYG tools. It worked, mind you, but it was not that user-friendly and certainly did not use the full potential of the platform: the virtual terminal is roughly modelled after the DEC VT220 terminal from 1983…

Microsoft did not initially get the Web. The web browser did not really fit into the office productivity universe; it was more a bizarre mashup of LaTeX and HyperCard with some SGML thrown in. In the same way the Unix crowd ran terminals inside X11 windows, the Windows crowd ran ActiveX controls inside web pages. It worked, mind you, but it was neither secure nor cross-platform.

Microsoft also did not get the iPod (who remembers the Zune?), nor the smart phone revolution in general. Windows was the center of the Microsoft universe, so clearly mobile devices had to run some version of it. The last version of Windows CE was released in 2013. More generally, many, including Apple, initially assumed that smart phones would just run web pages. You could run your web application inside a mobile web browser, but it was not that user-friendly and could not use all the new sensors the platforms had to offer.

So we are now at the point where the general idea seems to be: let’s run neural networks inside classic server-side web apps, like web search or chat. I think it can work, mind you, but these systems typically work better with personal context, and shipping that context to a server means the latency and the privacy won’t be that great. Also, what will be the point of a browsable web if its content is accessed either through apps or large language models? Why ship everything in human-readable form rather than in latent space…

I honestly don’t know what the next thing will be. Maybe we will finally see the Anti-Mac user interface. Maybe we will finally get the Cyberspace from Cyberpunk; that would be cool, but probably useless. My hunch is that it will be something around delegation and mediation: after 20 years of social networking and pretending you can bring your whole self to work, I could see my phone polishing my communications to others, and adapting and filtering those of others. AI is already used to make pictures look pretty; it could be used to pretend that I’m young, sexy and that I care. Maybe it could also handle some boring conversations. Or it could be an improved medical assistant and coach, a direction Apple seems to be taking. We will see…
